This blog outlines how to distinguish evidence-based practices from those that lack an evidence base or have a poor one. It focuses on quantitative research, also referred to as positivist, empirical and scientific research, which is based on numbers. Quantitative research is the best approach if the research question can be answered with numbers. Research on skills-based learning therefore often uses quantitative methods, as skills can be measured numerically. The results can also be easily communicated to others, as numbers can be presented in graphs and charts.

However, qualitative research, which uses words rather than numbers, is equally important and complements quantitative research. Qualitative research can also spark interest in a quantitative research project. It can answer questions about motivations, perceptions and meanings rather than cause and effect. It is an important part of teaching students with disability: they rarely have a voice within research because the literature is dominated by professionals, and a qualitative approach is the best way of capturing their viewpoint. Qualitative research can also address concerns not captured within quantitative research.

An example of the contrast between quantitative and qualitative research can be seen in the literature on Applied Behaviour Analysis (ABA). Evidence obtained from quantitative approaches largely considers ABA to be an effective approach to understanding and changing the behaviour of students with autism (see the Raising Children Network for more information). Yet within qualitative research, people with lived experience of disability have voiced concerns about the painful aversives used in ABA to remove autistic behaviour, and consider this to be abusive (see Kirkham 2017). Therefore, it is vital to understand what works best for most students, which can be obtained through quantitative research, but to balance this information with understandings obtained by engaging with people’s accounts of their experiences, which are found within qualitative research. Qualitative research is highly valuable, and will be addressed in another blog.

In order to provide clarity and consistency, the definitions of the following terms utilised in this blog are provided:

Evidence-based means there is a substantial level of high-quality, rigorous, systematic research demonstrating the activities or programs lead to improved outcomes for students.

Research-based means that the practice is based on principles that research has found to be effective. However, it does not mean the practice itself has been researched to determine specific outcomes.

Best-practice is an overused term with various meanings, but is largely used to describe “what works” in a particular context.

Fidelity refers to the implementation of a practice without variation from the way it was designed to be implemented.

Evidence-Based and Research-Based Practice

We live in an era where evidence, statistics and facts are often overridden by personal opinion and experiences. This can be partly attributed to the proliferation of information available on the internet. But not all information is created equal, and when personal opinion masquerades as evidence-based research, it undermines the education of all students, particularly those with disability who require sound and robust pedagogies. While there is a strong uptake of evidence-based practice among teachers of students with disability, disturbingly, almost half of these teachers use practices with a poor evidence base at least weekly, although at a much lower level than evidence-based practices (Carter, Stephenson & Strnadova 2011).

Evidence-based teaching involves the use of evidence to:

- Establish where students are with their learning (e.g. using observation, standardised testing, etc.)

- Decide on appropriate teaching strategies and interventions

- Monitor student progress

- Evaluate teaching effectiveness

(Masters 2018)

Teachers should, in the first instance, select assessments, resources, teaching strategies and programs with high probabilities of success based on available evidence. Evidence-based decisions, assessments and instruction refer to practices that have been shown by independent, verifiable, quality research studies to be effective (Cook & Odom 2013). The term “research-based” is often used as a synonym for “evidence-based”, but it actually refers to decisions, assessments and instruction where the practice is derived from research but has not been independently researched to ascertain whether it leads to positive outcomes for students. Hence, evidence-based practice should be the first choice, followed by research-based practice.

Teachers clearly want positive outcomes for students, as they put considerable time, thought and energy into developing teaching programs. However, it is challenging for teachers to identify substantial, quality research from a trustworthy source, determine which evidence-based approach is suitable for students in their context and translate this into their classroom with fidelity. The gap between the evidence and classroom practice arises from many variables, including the teacher’s professional judgement and expertise, the characteristics of the learner, and input from the student, the family and the wider team, which may include therapists, counsellors and psychologists. Therefore, teachers need to determine not only what works, but what works for their students. At the same time, educators should avoid instructional practices that are described within the literature as ineffective or that have the potential to cause harm. Some instructional practices may not be harmful in themselves, but if they have a neutral effect, they can take time away from evidence-based programs that are effective.

What to Look for in Evidence-Based Research

Understanding what research is evidence-based is essential when developing teaching programs, especially for students with disability who cannot afford to have time wasted on ineffective programs. There are a number of checkpoints when determining if an approach is evidence-based.

1. Peer Review Process

Has the author had their work published in a high-quality, academic journal (see next point for further explanation) through the peer review process? The peer review process is the evaluation of research by qualified members of a profession who have expertise in the field. It is a form of regulation and is used to maintain quality standards, improve performance, and provide credibility. The process of peer review plays a major role in building a broad-based agreement or consensus. Consensus does not mean that researchers do not continue studying a particular idea, but rather that given the information available at the time, the researchers agree to a set of facts. These facts can be challenged if new data, methods, observations or facts come to light. Consensus is a way of tapping the collective wisdom of a group of experts.

The absence of peer review should raise serious concerns about the research and the conclusions being reported. If research is ‘in-house’ and has not been through a peer review process, then the research may be questionable.

2. Journal Quality

Research should be published in quality national and international journals. The measure of journal quality is considered by many to be the Journal Impact Factor (JIF). The higher the impact factor, the greater the quality of the journal is taken to be. The impact factor is used to compare different journals within a field and is based on citations: it is the average number of citations received in a given year by the articles the journal published in the previous two years. An impact factor of 6 or more is considered high. However, not all journals have an impact factor. For example, the Australasian Journal of Special and Inclusive Education is relatively new, and as yet does not have an impact factor. The impact factor can usually be located through the journal website.
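As a rough illustration of the arithmetic behind an impact factor, the sketch below uses the standard two-year calculation. The function name and the example figures are hypothetical and are not taken from any real journal.

```python
def journal_impact_factor(citations_this_year: int, citable_items_prev_two_years: int) -> float:
    """Two-year impact factor for a given year.

    citations_this_year: citations received this year to articles the
        journal published in the previous two years.
    citable_items_prev_two_years: number of citable articles the journal
        published in those two years.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("The journal must have published at least one citable item.")
    return citations_this_year / citable_items_prev_two_years


# Hypothetical journal: 120 articles over the previous two years,
# cited 300 times in total this year.
example_jif = journal_impact_factor(300, 120)
print(example_jif)  # 2.5
```

Because the figure is an average across a whole journal, it says nothing about the quality of any single article, which is why it is only one checkpoint among several.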

3. Referencing

The paper should have extensive references to past research, both in-text and at the end of the article. Recent, relevant references from the past 3-5 years are a good indicator of a reputable article. Research changes rapidly, so a paper that relies on dated references is a cause for concern.

4. Funding

Is there a conflict of interest regarding funding for the research? Has the research been funded by an organisation? Does the organisation have an agenda to promote, such as the sale of commercial programs or professional development? For example, research on the Arrowsmith program (a controversial program for students with learning difficulties) is not independently funded, but receives an injection of funding from the Arrowsmith school. This suggests that there may be a degree of bias in the report, especially since the outcome of the research is not consistent with other independently funded studies. Hence, research that is not independently funded requires an extra level of scrutiny. By contrast, to attract independent funding, researchers must be able to justify their project to an independent party who has no vested interest in the outcome.

5. Qualifications and background of the author

It is important to ascertain whether the author has academic credentials in the field they are researching. A PhD is the preferred qualification, although many strong researchers do not hold this qualification. The author should also be connected to a credible university, academic institution or unbiased, reputable organisation. Another means of checking on the author is the h-index, a numerical indicator that combines the quantity of an author’s publications with how often they are cited: an author has an h-index of h when h of their publications have each been cited at least h times. For instance, according to ResearchGate, Professor John Hattie has an h-index of 61. This is an exceptionally high result, as a strong h-index is approximately 20.
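For readers who want to see how an h-index is worked out, here is a minimal sketch based on the standard definition: the largest number h such that h of the author’s papers have each been cited at least h times. The function name and the citation counts are made up for illustration.

```python
def h_index(citations: list[int]) -> int:
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h


# Hypothetical author with five papers.
print(h_index([10, 8, 5, 4, 3]))  # 4
```

Note that one very highly cited paper does not raise the score on its own: an author with citation counts of 25, 8, 5, 3 and 3 has an h-index of 3.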

6. Statement of Limitations

The limitations of the research are those characteristics of the design or methodology that influence the interpretation of the findings or affect the transferability of the research. Some studies may state that further research is required to address the limitations. The statement of limitations provides an indication of whether the research can be used in your context. For example, a practice may have been trialled only with a specific group of students, such as students with autism, in which case the results cannot be generalised to other groups of students, such as those with a diagnosis of language disorder. Another example is research that has only been trialled within a clinical setting, so it is not necessarily generalisable to a classroom situation.

7. Experimental control and replication

Replicating research to ensure the results can be generalised to the larger population is an important component of the evidence-based process. Therefore, it is important to ask if a range of people trialled the test, program or intervention and found that it works.

Experimental control refers to accounting for and excluding alternative explanations for the change in student outcomes, that is, explanations other than students receiving the educational practice being tested. Factors such as socio-economic status, the prior learning environment and bias (selectively interpreting or ignoring unfavourable data) are variables that need to be considered and controlled. It is also important that outcomes are monitored over time.

8. Ethical considerations

There are ethical considerations when conducting research. Studies should be assessed by an ethics committee to ensure that research subjects are not coerced into participating in a study, that confidentiality is maintained, and that the risks of the research are minimised while the benefits are maximised. Students with disability are a vulnerable population and require special consideration, as they have a long history of experiencing human rights abuses. It is important that researchers do not exploit students for their own personal gain.

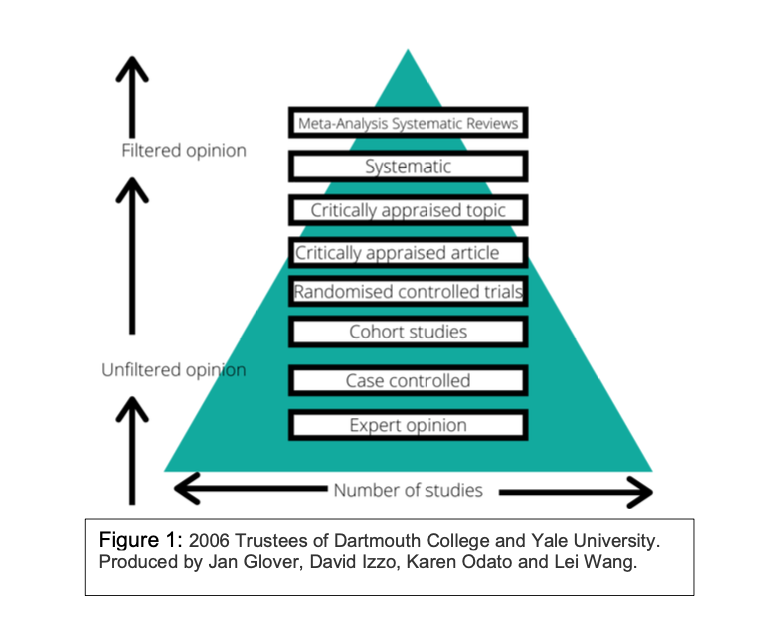

9. The levels of evidence

Evidence for a particular program, strategy or idea may come in many different forms:

- Expert opinion: Experts in a particular field may offer an opinion on how well an intervention may work. This opinion may be based on evidence from research they have done or read, but it may also be influenced by personal biases such as beliefs, opinions or politics. When listening to expert opinions, it is important to think critically about whether an opinion should be considered. Some questions to ask include: Is the expert’s expertise relevant to the topic being discussed? Does the expert provide evidence for their opinions? Is there consensus in the opinions of different experts on the same topic? Does the expert have conflicting interests that may bias their opinion?

- Case controlled studies: These usually involve the participation of one or only a few test subjects. The participants are administered an intervention and this may sometimes be contrasted with another person or small group who has not had the intervention. These studies may not be reliable, as they involve a small number of participants, the participants may not have been chosen randomly, and other variables that may impact on the results may not have been considered.

- Cohort studies: These involve larger groups of people over longer periods of time. These may also include another group of people who did not receive the intervention, similarly monitored over the same period of time. The larger numbers of participants mean that the results are more reliable and are able to be generalised to other people who did not participate in the study. However, participants in these types of studies are often chosen by the researchers and this may introduce bias which impacts on the reliability of the outcomes. It is also difficult to make sure that other variables that may impact on the outcomes are controlled.

- Randomised controlled trial (RCT): In these types of studies, large numbers of participants are assigned randomly to the intervention group or non-intervention group. The study may also be “blinded”, which means the people involved do not know which group each participant is in. A large double-blind randomised controlled trial (i.e. neither the participants nor the researchers assessing them know who is receiving the intervention) is the strongest, most reliable type of single study that provides evidence for the effectiveness of an intervention.

- Critically appraised article: Here, experts in a particular field will provide a critical analysis of a specific article published by another researcher. The analysis provides information about how a particular piece of research was conducted and what conclusions may be drawn.

- Critically appraised topic: Here, experts in a particular field will provide a critical analysis of multiple research studies within a particular topic. The analysis provides an evaluation and synthesis of these pieces of research and provides a summary of conclusions.

- Systematic reviews: These involve the comparison of results of all available reliable studies for a particular intervention. Researchers who conduct systematic reviews will also provide appraisals for the studies they collected for the review. These appraisals will involve rationales to explain why studies are added or not added to a review process.

- Meta-analyses: This is where statistical methodologies are used to calculate the effects of an intervention across multiple studies. Systematic reviews that have gone through a process of meta-analysis are considered the strongest and highest quality of evidence.

These types of evidence are displayed in the accompanying diagram, which shows their placement in the hierarchy of reliability of evidence. The higher up the evidence pyramid, the more studies the evidence draws on and the more reliable the information. Importantly, some types of evidence are critically analysed (filtered) by experts in the field and others are not. When evidence has been critically appraised by an external expert, this adds an extra layer of reliability.
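To give a sense of what “statistical methodologies” can mean in a meta-analysis, the sketch below shows one common approach, a fixed-effect inverse-variance average, in which more precise studies (those with smaller standard errors) count for more. It is a simplified illustration under that assumption rather than a description of how any particular review was carried out, and the function name and study figures are hypothetical.

```python
import math


def pooled_effect(effects: list[float], standard_errors: list[float]) -> tuple[float, float]:
    """Fixed-effect inverse-variance pooling of study effect sizes.

    Each study is weighted by 1 / SE^2, so precise studies contribute more.
    Returns the pooled effect and its standard error.
    """
    if not effects or len(effects) != len(standard_errors):
        raise ValueError("Provide one standard error per effect size.")
    weights = [1 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return pooled, pooled_se


# Two hypothetical studies: a precise one (effect 0.5, SE 0.1)
# and a less precise one (effect 0.1, SE 0.2).
effect, se = pooled_effect([0.5, 0.1], [0.1, 0.2])
print(round(effect, 2), round(se, 3))
```

In this example the pooled effect sits much closer to 0.5 than to 0.1, because the first study is the more precise of the two.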

10. Claims about the effectiveness of the approach are substantiated

Beware of language that is highly technical and purposely chosen to mislead. For example, Pamela Snow (2015) points out the misleading marketing utilised for the Arrowsmith program which draws on the following terms to mislead people into thinking it is a rigorous, scientific program linked to neuroscience, when it is not:

- cognitive

- neuro

- brain-based

- neuronal

- synaptic

- neural sciences

- cognitive-curricular research

- brain imaging

- targeted cognitive exercise

- neuroplasticity

Also, the heavy use of testimonials in the absence of other indicators of evidence is another cautionary sign. Scrutinise testimonials as these may be carefully chosen so only positive reviews are included and negative comments are removed.

11. Theory Fidelity

Intervention programs are often developed based on a theory, or a way of thinking. When the program has been thoroughly researched and shown to be effective, its effectiveness in the classroom will be heavily dependent on a teacher’s understanding of the theory that underpins the program, and fidelity to conducting the program in the way the developers intended.

Resources

When evidence-based approaches to instruction are ignored it sends students on a trajectory away from achievement. All students, including students with disability, require carefully structured explicit, systematic instruction in order to gain academic skills. It should also be noted that students with disability should follow the same curriculum as their non-disabled peers, with adaptations and modifications as needed. The following resources outline evidence-based educational research from local and international findings.

Deans for Impact

https://deansforimpact.org/

Education Endowment Foundation. (2019). Evidence for Learning Teaching & Learning Toolkit: Education Endowment Foundation. Retrieved from https://evidenceforlearning.org.au/the-toolkits/the-teaching-and-learning-toolkit/

Evidence for Learning in collaboration with Melbourne Graduate School of Education. (2019). Australasian Research Summaries. Retrieved from https://evidenceforlearning.org.au/the-toolkits/the-teaching-and-learning-toolkit/australasian-research-summaries/

Education Endowment Foundation. (2019). Evidence for Learning Early Childhood Education Toolkit: Education Endowment Foundation. Retrieved from https://evidenceforlearning.org.au/the-toolkits/early-childhood-education-toolkit/

Evidence for Learning in collaboration with Telethon Kids. (2019). Australasian Research Summaries. Retrieved from https://evidenceforlearning.org.au/the-toolkits/early-childhood-education-toolkit/australasian-research-summaries/

Great Teaching Toolkit

https://www.greatteaching.com/

Raising Children Network

https://raisingchildren.net.au/autism/therapies-guide

What Works Clearinghouse

https://ies.ed.gov/ncee/wwc/

Conclusion

Teaching is a highly skilled and complex activity. To make a demonstrable difference to students’ learning outcomes, educators should draw on evidence-based practices in the first instance. However, determining whether a practice is evidence-based is challenging, as it takes time to locate, read and unpack evidence. The resources listed in this blog are therefore offered as an introduction to high-quality, evidence-based research that is presented in an easy-to-read format.

References

Carter, M., Stephenson, J., & Strnadova, I. (2011). Reported Prevalence by Australian Special Educators of Evidence-Based Instructional Practices. Australasian Journal of Special Education, 35, 47-60.

Cook, B. G., & Odom, S. L. (2013). Evidence-Based Practices and Implementation Science in Special Education. Exceptional Children, 79(2).

Kirkham, P. (2017). ‘The line between intervention and abuse’ - autism and applied behaviour analysis. History of the Human Sciences, 30(2).

Masters, G. N. (2018) The role of evidence in teaching and learning. Teacher, 27 August 2018. Retrieved from https://www.teachermagazine.com.au/columnists/geoff-masters/the-role-of-evidence-in-teaching-and-learning

Snow, P. (2015). Why not everyone is enthusiastic about the Arrowsmith Program. Retrieved from http://pamelasnow.blogspot.com/2015/05/why-not-everyone-is-enthusiastic-about.html

This blog was co-written with Sim Dhaliwal (speech pathologist).